Full Potential of AWS to Elevate - Data Engineering Career Part 2

Welcome back to the blog! If you haven’t had the chance to read Part 1 yet, you can find it here:

Full Potential of AWS to Elevate — Data Engineering Career Part 1

Hello aspiring Data Engineers, and welcome to an exciting journey into the world of data engineering. If you’re here, you’ve already taken the first step towards advancing your career and embracing the transformative power of data.

Now, let’s dive into Part 2 of our series, where we’ll explore further insights into maximizing the benefits of AWS for your data engineering career.

I will continue discussing infrastructure tools.

e. Data Warehouses:

Data warehouses are specialized databases designed for storing and managing large volumes of structured data from various sources within an organization. They are optimized for analytical queries and reporting, enabling businesses to gain insights from their data.

These repositories are structured to support complex queries and analytics, often involving historical data spanning multiple years. Data warehouses typically use a dimensional modeling approach, organizing data into fact tables (containing metrics) and dimension tables (containing descriptive attributes).
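To make the fact and dimension split concrete, here is a minimal sketch using Python's built-in sqlite3 module as a stand-in for a real warehouse. The table names (dim_product, fact_sales), the columns, and the sample rows are all made up for illustration.

```python
import sqlite3

def build_star_schema(conn):
    """Create one dimension table and one fact table, then load sample rows."""
    conn.executescript("""
        CREATE TABLE dim_product (
            product_id INTEGER PRIMARY KEY,
            category   TEXT NOT NULL          -- descriptive attribute
        );
        CREATE TABLE fact_sales (
            sale_id    INTEGER PRIMARY KEY,
            product_id INTEGER NOT NULL REFERENCES dim_product(product_id),
            amount     REAL NOT NULL          -- metric
        );
    """)
    conn.executemany("INSERT INTO dim_product VALUES (?, ?)",
                     [(1, "books"), (2, "games")])
    conn.executemany("INSERT INTO fact_sales VALUES (?, ?, ?)",
                     [(1, 1, 10.0), (2, 1, 15.0), (3, 2, 40.0)])

def revenue_by_category(conn):
    """Join the fact table to its dimension and aggregate the metric."""
    rows = conn.execute("""
        SELECT d.category, SUM(f.amount)
        FROM fact_sales AS f
        JOIN dim_product AS d ON d.product_id = f.product_id
        GROUP BY d.category
        ORDER BY d.category
    """)
    return rows.fetchall()

conn = sqlite3.connect(":memory:")
build_star_schema(conn)
print(revenue_by_category(conn))  # [('books', 25.0), ('games', 40.0)]
```

The same pattern, metrics in a narrow fact table joined to descriptive dimension tables, is what analytical queries in a warehouse typically look like, just at a much larger scale.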

The primary functions of data warehouses include data integration, data cleansing, data transformation, and data storage. They serve as a central repository for consolidated, cleansed, and transformed data, making it easier for analysts and decision-makers to access and analyze information for business intelligence, reporting, and decision-making purposes. The AWS service for data warehousing is Amazon Redshift.

Amazon Redshift: Redshift is AWS’s fully managed data warehouse service, designed for high-performance analytics and data warehousing. With Redshift, data engineers can query and analyze petabytes of data using familiar SQL syntax, which helps businesses derive insights from their data without managing the underlying infrastructure.
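As a rough sketch of what querying Redshift from Python can look like, the function below uses the Redshift Data API through a boto3 client that you pass in, for example one created with boto3.client("redshift-data"). The function name, the workgroup and database values, and the polling interval are assumptions for illustration, and this version targets Redshift Serverless via WorkgroupName.

```python
import time

def run_redshift_query(client, sql, *, workgroup, database, poll_seconds=1.0):
    """Submit SQL through the Redshift Data API and wait for the rows.

    `client` is assumed to be a boto3 "redshift-data" client.
    """
    statement_id = client.execute_statement(
        WorkgroupName=workgroup, Database=database, Sql=sql
    )["Id"]
    # The Data API is asynchronous, so poll until the statement finishes.
    while True:
        status = client.describe_statement(Id=statement_id)["Status"]
        if status == "FINISHED":
            break
        if status in ("FAILED", "ABORTED"):
            raise RuntimeError(f"statement {statement_id} ended as {status}")
        time.sleep(poll_seconds)
    # Only the first page of results is returned in this sketch.
    return client.get_statement_result(Id=statement_id)["Records"]

# Hypothetical usage (requires boto3 and AWS credentials):
#   import boto3
#   rows = run_redshift_query(boto3.client("redshift-data"),
#                             "SELECT COUNT(*) FROM sales",
#                             workgroup="my-workgroup", database="dev")
```

For a provisioned cluster you would pass ClusterIdentifier (with DbUser or SecretArn) instead of WorkgroupName, and large result sets need to be paged with the NextToken value in the response.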

f. Streaming:

Streaming in data engineering refers to the process of continuously processing and analyzing data as it is generated, rather than waiting for the entire dataset to be collected before processing begins. This approach enables real-time or near-real-time data processing, making it ideal for applications that require immediate insights or actions based on incoming data.

Streaming data can come from various sources, such as IoT devices, sensors, social media feeds, logs, clickstreams, financial transactions, and more. The data is typically processed in small, incremental batches or individual events as they arrive, allowing for low-latency processing and rapid response times.

In data engineering, streaming data processing involves several key components and concepts:

i. Streaming Platforms: Streaming data processing frameworks or platforms, such as Apache Kafka, Apache Flink, Apache Spark Streaming, and Amazon Kinesis, provide the infrastructure for ingesting, processing, and analyzing streaming data at scale.

ii. Ingestion: Streaming data is ingested from source systems into the streaming platform in real-time. This can involve various techniques such as direct integration with source systems, message queuing, or event-driven architectures.

iii. Processing: Once ingested, the streaming data is processed in real-time using stream processing engines. These engines apply transformations, aggregations, filters, and other operations to the data streams to derive insights or perform actions.

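iv. Windowing: Windowing groups an unbounded stream into finite chunks so that aggregations can be computed over them. Common choices are fixed (tumbling) windows, overlapping sliding windows, and session windows that close after a period of inactivity. This enables calculations such as aggregations over time periods or session-based analysis.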

v. State Management: Stream processing often involves maintaining stateful computations, such as maintaining counts, aggregations, or session information over time. State management mechanisms ensure consistency and fault tolerance in distributed stream processing systems.

vi. Output Sink: Processed data is typically sent to output sinks such as databases, data lakes, message queues, or downstream systems for further analysis, visualization, or action.
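The processing, windowing, and state management ideas above can be sketched in a few lines of plain Python. This is a simplified illustration, not how Kafka, Flink, or Kinesis implement it: the events are a hard-coded list of (timestamp, key) pairs, the function name is my own, and a real engine would emit each window as it closes and deal with late or out-of-order events.

```python
from collections import defaultdict

def tumbling_window_counts(events, window_seconds):
    """Count events per (window start, key) using fixed, non-overlapping windows.

    `events` is an iterable of (timestamp_seconds, key) pairs.
    """
    state = defaultdict(int)  # running state: (window_start, key) -> count
    for timestamp, key in events:
        # Every timestamp falls into exactly one window of `window_seconds`.
        window_start = timestamp - (timestamp % window_seconds)
        state[(window_start, key)] += 1
    return dict(state)

events = [(0, "click"), (12, "click"), (59, "view"), (61, "click"), (125, "view")]
sink = tumbling_window_counts(events, window_seconds=60)  # dict acts as the output sink
for (window_start, key), count in sorted(sink.items()):
    print(window_start, key, count)
```

Here the dictionary plays the role of both the state store and the output sink. In a distributed system that state would be checkpointed so the counts survive a worker failure.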

The AWS service for streaming is Amazon Kinesis.

Amazon Kinesis: Amazon Kinesis enables real-time processing of streaming data at massive scale. Whether you’re dealing with IoT sensor data, clickstream events, or log data, Kinesis provides the tools to ingest, process, and analyze streaming data in real time, so data engineers can derive actionable insights with minimal latency.
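As a small illustration of the ingestion side, the sketch below writes one JSON event to a Kinesis data stream using the put_record call of a boto3 Kinesis client that you pass in. The helper name, the stream name, and the event fields are assumptions for the example.

```python
import json

def send_event(client, stream_name, event, partition_key):
    """Serialize one event as JSON and write it to a Kinesis data stream.

    `client` is assumed to be a boto3 "kinesis" client.
    """
    response = client.put_record(
        StreamName=stream_name,
        Data=json.dumps(event).encode("utf-8"),
        # Records with the same partition key are routed to the same shard.
        PartitionKey=partition_key,
    )
    return response["SequenceNumber"]

# Hypothetical usage (requires boto3 and AWS credentials):
#   import boto3
#   send_event(boto3.client("kinesis"), "clickstream",
#              {"user": "u-1", "page": "/home"}, partition_key="u-1")
```

Choosing the partition key matters: a key with many distinct values (such as a user or device ID) spreads records across shards, while a constant key sends everything to one shard.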

g. NoSQL Databases:

NoSQL databases, also known as “Not Only SQL” databases, are a type of database management system that diverges from the traditional relational database model. They are designed to handle large volumes of unstructured or semi-structured data, offering flexibility, scalability, and high performance for modern applications. The AWS services for NoSQL workloads are Amazon DynamoDB and Amazon DocumentDB.

Amazon DynamoDB: Amazon DynamoDB is a fully managed NoSQL database service designed to provide seamless scalability, high performance, and low-latency access to data at any scale. It has a serverless architecture, allowing developers to focus on building applications without worrying about infrastructure provisioning, configuration, or maintenance.

Here are some key features and capabilities of Amazon DynamoDB:

i. Fully Managed: DynamoDB is a fully managed service, which means AWS handles the infrastructure management tasks such as hardware provisioning, software patching, replication, backups, and scaling. This enables developers to focus on building applications rather than managing infrastructure.

ii. Scalability: DynamoDB is designed for seamless scalability, allowing you to scale throughput and storage up or down based on demand without downtime. It automatically distributes data and traffic across multiple partitions to keep performance consistent as your workload grows.

iii. High Performance: DynamoDB provides single-digit millisecond latency for read and write operations, making it suitable for latency-sensitive applications that require fast access to data. It achieves high performance through a distributed architecture and SSD storage.

iv. Flexible Data Model: DynamoDB supports both key-value and document data models, providing flexibility in data modeling. It allows you to store and retrieve structured, semi-structured, or unstructured data using simple API calls.

v. Schema-less Design: DynamoDB is schemaless apart from the primary key. When you create a table you define only the partition key and an optional sort key; every other attribute can vary from item to item. This allows for flexible data schemas and easy schema evolution over time.

vi. Built-in Security: DynamoDB offers built-in security features such as encryption at rest and in transit, fine-grained access control using AWS Identity and Access Management (IAM), and integration with AWS Key Management Service (KMS) for managing encryption keys.

vii. Integration with AWS Ecosystem: DynamoDB seamlessly integrates with other AWS services such as AWS Lambda, Amazon S3, Amazon Kinesis, Amazon EMR, and Amazon Redshift, enabling you to build scalable and fully managed end-to-end solutions.
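To show what the key-value model looks like in practice, here is a minimal sketch using the low-level boto3 DynamoDB client, passed in as a parameter. The table name, the key names (customer_id, order_id), and both helper functions are hypothetical. Only the key attributes are declared at table creation, and any other attributes are supplied per item.

```python
def create_orders_table(client, table_name="orders"):
    """Create a table, declaring only the partition key and sort key."""
    client.create_table(
        TableName=table_name,
        AttributeDefinitions=[
            {"AttributeName": "customer_id", "AttributeType": "S"},
            {"AttributeName": "order_id", "AttributeType": "S"},
        ],
        KeySchema=[
            {"AttributeName": "customer_id", "KeyType": "HASH"},   # partition key
            {"AttributeName": "order_id", "KeyType": "RANGE"},     # sort key
        ],
        BillingMode="PAY_PER_REQUEST",  # on-demand capacity
    )

def put_order(client, table_name, customer_id, order_id, **attributes):
    """Write one item; extra attributes are stored as strings in this sketch."""
    item = {"customer_id": {"S": customer_id}, "order_id": {"S": order_id}}
    item.update({name: {"S": str(value)} for name, value in attributes.items()})
    client.put_item(TableName=table_name, Item=item)
    return item

# Hypothetical usage (requires boto3 and AWS credentials):
#   import boto3
#   client = boto3.client("dynamodb")
#   create_orders_table(client)
#   client.get_waiter("table_exists").wait(TableName="orders")
#   put_order(client, "orders", "c-1", "o-100", status="shipped")
```

Table creation is asynchronous, so the usage comment waits for the table to exist before writing to it.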

Amazon DocumentDB: Amazon DocumentDB (with MongoDB compatibility) is a fully managed document database service that supports MongoDB workloads. It is designed to provide scalability, performance, and reliability for applications that require flexible document data models.

h. ETL/ ELT/ Data Transformation:

ETL (Extract, Transform, Load) and ELT (Extract, Load, Transform) are fundamental processes in data engineering for extracting data from various sources, transforming it into a suitable format, and loading it into a target data storage or data warehouse. These processes play a crucial role in data integration, data migration, and data warehousing initiatives, enabling organizations to derive actionable insights from their data assets. Let’s delve into each process:

1. Extract:

Extraction involves retrieving data from multiple sources such as databases, files, APIs, streams, or external systems.

Data can be extracted in various formats including structured (e.g., relational databases), semi-structured (e.g., JSON, XML), or unstructured (e.g., text, images).

Extracted data may undergo initial filtering, cleansing, or enrichment to ensure data quality and relevance.

2. Transform:

Transformation involves converting, enriching, and aggregating the extracted data to meet the requirements of the target system or analytics use cases.

Transformations may include data cleansing (e.g., removing duplicates, correcting errors), data validation, data normalization, data enrichment (e.g., adding calculated fields, joining with reference data), and data aggregation (e.g., summarizing data at different levels).

Business logic and rules are applied during transformation to derive meaningful insights and prepare the data for analysis or reporting.

3. Load:

Loading writes the transformed data into a target storage or destination, such as a data warehouse, data lake, database, or analytical platform.

Loaded data may be stored in a structured format optimized for querying and analysis, enabling efficient retrieval and processing.

Loading processes may include incremental loading (updating only new or changed data), batch loading, or real-time streaming of data depending on the requirements of the application.
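The three steps above can be sketched end to end with nothing but the Python standard library. This is a toy pipeline under obvious assumptions: the source is an inline CSV string, the target is an in-memory SQLite database, and the column names and cleaning rules are invented for the example.

```python
import csv
import io
import sqlite3

RAW_CSV = """order_id,customer,amount
1,alice,10.50
2,bob,
3,carol,7.25
3,carol,7.25
"""

def extract(text):
    """Extract: read rows from the source (an inline CSV here)."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Transform: drop rows without an amount, remove duplicates, cast types."""
    seen, cleaned = set(), []
    for row in rows:
        if not row["amount"] or row["order_id"] in seen:
            continue
        seen.add(row["order_id"])
        cleaned.append(
            (int(row["order_id"]), row["customer"].title(), float(row["amount"]))
        )
    return cleaned

def load(conn, records):
    """Load: upsert the cleaned records so reruns do not create duplicates."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    conn.executemany("INSERT OR REPLACE INTO orders VALUES (?, ?, ?)", records)
    conn.commit()

conn = sqlite3.connect(":memory:")
load(conn, transform(extract(RAW_CSV)))
print(conn.execute("SELECT * FROM orders ORDER BY order_id").fetchall())
# [(1, 'Alice', 10.5), (3, 'Carol', 7.25)]
```

Using INSERT OR REPLACE keyed on order_id is one simple way to approximate incremental loading: rerunning the pipeline with new or changed rows updates the table instead of duplicating data.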

In traditional ETL pipelines, data transformation occurs before loading into the target system. However, in ELT pipelines, data is loaded into the target system first, and transformation is performed within the target system using its processing capabilities. ELT is often preferred for big data and cloud-based analytics platforms where data storage and processing are decoupled, enabling scalable and cost-effective data processing.
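For contrast, here is a minimal ELT sketch, again with SQLite standing in for the target system. The raw rows are loaded untouched into a staging table, and the cleaning happens afterwards as SQL inside the target. The table names (raw_orders, orders) and the sample rows are assumptions for illustration.

```python
import sqlite3

def load_raw(conn, rows):
    """Load step: store the source rows untouched in a staging table."""
    conn.execute("CREATE TABLE raw_orders (order_id TEXT, customer TEXT, amount TEXT)")
    conn.executemany("INSERT INTO raw_orders VALUES (?, ?, ?)", rows)

def transform_in_target(conn):
    """Transform step: the target engine cleans and casts the data with SQL."""
    conn.execute("""
        CREATE TABLE orders AS
        SELECT DISTINCT
            CAST(order_id AS INTEGER) AS order_id,
            customer,
            CAST(amount AS REAL) AS amount
        FROM raw_orders
        WHERE amount != ''
    """)

conn = sqlite3.connect(":memory:")
load_raw(conn, [("1", "alice", "10.50"), ("2", "bob", ""),
                ("3", "carol", "7.25"), ("3", "carol", "7.25")])
transform_in_target(conn)
print(conn.execute("SELECT * FROM orders ORDER BY order_id").fetchall())
# [(1, 'alice', 10.5), (3, 'carol', 7.25)]
```

Because the raw table is kept, the transformation can be changed and rerun later without going back to the source system, which is one reason ELT suits platforms with cheap storage and scalable compute.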

Data transformation is a critical step in data engineering, facilitating data integration, data quality management, and analytics. It enables organizations to extract maximum value from their data assets by preparing data for analysis, reporting, machine learning, and other data-driven initiatives.

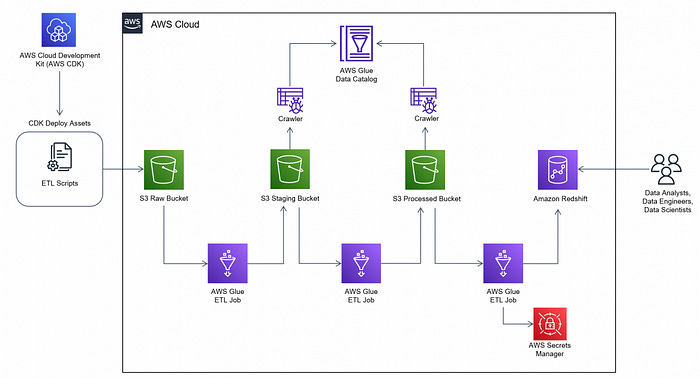

AWS Glue serves as the AWS tool for transforming data through ETL processes.

AWS Glue: AWS Glue is a fully managed ETL (Extract, Transform, Load) service provided by Amazon Web Services. It simplifies the process of preparing and loading data for analytics and data processing tasks. Here’s how AWS Glue facilitates ETL and data transformation in data engineering:

i. Data Catalog: AWS Glue provides a centralized metadata repository called the Data Catalog, which stores metadata about your data sources, transformations, and targets.

ii. ETL Jobs: AWS Glue allows you to define ETL jobs using a visual interface or code. ETL jobs can be scheduled to run at specified intervals or triggered by events such as data arrival or changes in data sources. You can write the transformations in Python (PySpark) or Scala, combining built-in transforms with custom logic.

iii. Schema Inference and Evolution: AWS Glue crawlers scan your data sources, infer their schemas automatically, and record them in the Data Catalog. Glue also supports schema evolution, so changes in data schemas over time can be picked up with little manual intervention.

iv. Data Cleaning and Transformation: AWS Glue provides built-in transformation functions and libraries for common data cleaning and transformation tasks. You can apply transformations such as filtering, aggregating, joining, and enriching data to prepare it for analysis or loading into target systems.

v. Integration with AWS Services: AWS Glue integrates seamlessly with other AWS services such as Amazon S3, Amazon RDS, Amazon Redshift, Amazon Athena, and Amazon EMR. You can use AWS Glue to extract data from various sources, transform it, and load it into data warehouses, data lakes, or analytical platforms.

vi. Serverless Architecture: AWS Glue is a serverless service, meaning you don’t need to provision or manage infrastructure. It automatically scales to handle varying workloads and provides cost-effective data processing without the need for manual scaling or capacity planning.
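Once a Glue job exists, it can be started and monitored from Python. The sketch below uses the start_job_run and get_job_run calls of a boto3 Glue client that you pass in. The function name, the job name, the argument keys, and the polling interval are assumptions for illustration.

```python
import time

TERMINAL_STATES = {"SUCCEEDED", "FAILED", "STOPPED", "TIMEOUT", "ERROR", "EXPIRED"}

def run_glue_job(client, job_name, arguments=None, poll_seconds=30.0):
    """Start a Glue job run and block until it reaches a terminal state.

    `client` is assumed to be a boto3 "glue" client.
    """
    run_id = client.start_job_run(
        JobName=job_name, Arguments=arguments or {}
    )["JobRunId"]
    while True:
        run = client.get_job_run(JobName=job_name, RunId=run_id)["JobRun"]
        if run["JobRunState"] in TERMINAL_STATES:
            return run["JobRunState"]
        time.sleep(poll_seconds)

# Hypothetical usage (requires boto3 and AWS credentials):
#   import boto3
#   state = run_glue_job(boto3.client("glue"), "orders-etl",
#                        {"--source_path": "s3://my-bucket/raw/"})
```

In practice you would more often let a Glue trigger or a workflow orchestrator start the job, but a small wrapper like this is handy for ad hoc runs and tests.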

This marks the end of Part 2 in our series on “Full Potential of AWS to Elevate — Data Engineering Career”. In the upcoming posts, I’ll dive deeper into Analytics and Machine Learning & Artificial Intelligence services.

Stay tuned for a wealth of insights and tips on harnessing the full potential of AWS to elevate your data engineering career. Until then, keep exploring and innovating!